Researchers at TU Ilmenau have made groundbreaking progress in the field of machine learning. In an extensive study, the scientists investigated how the mechanisms that enable humans to consolidate learned knowledge during sleep can be transferred to artificial neural networks. The researchers expect the results to significantly increase the performance of self-learning machines.

Only a few years ago, sleep researchers demonstrated experimentally that humans learn during the deep sleep phase. They built on an observation made during the waking phase: the connections between neurons (nerve cells), called synapses, do more than pass chemical or electrical signals from one neuron to the next. As part of learning, they also amplify or attenuate the intensity of these signals. In this way, synapses enable neurons to absorb and adapt to changing influences from the environment. During sleep, this heightened state of excitation returns to normal. The nervous system can then process the new information absorbed during the waking phase and store it in memory: by forgetting random or unimportant information, it consolidates what has been learned while becoming more receptive to new information again.

Self-regulatory mechanisms of the brain transferred to artificial neural networks

Professor Patrick Mäder, head of the Group for Software Engineering for Safety-Critical Systems at TU Ilmenau, built on this process, which experts call synaptic plasticity: "Synaptic plasticity is responsible for the function and performance of our brain and is thus the basis of learning. If synapses always remained in an activated state, this would ultimately make learning more difficult, as we know from animal experiments. It is the recovery phase during sleep that allows us to retain what we have learned in memory."

The researchers at TU Ilmenau mimic this ability of the synaptic system, to react dynamically to a wide variety of stimuli while keeping the nervous system stable and in balance, in artificial neural networks. Using a technique called synaptic scaling, they transfer the mechanisms that regulate the dynamic brain system to machine learning methods, with the result that the artificial neural models behave as effectively as their biological counterparts.
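The article does not describe how synaptic scaling is implemented in the published method. Purely as an illustration of the biological principle, the Python sketch below applies homeostatic synaptic scaling to a single hypothetical layer of ReLU neurons: each neuron's incoming weights are multiplied by one common factor that pushes its average activity toward a target value. The helper names (`layer_activity`, `mean_activity`, `synaptic_scaling`), the `target` and `rate` parameters and the random data are assumptions made for this example, not details taken from the study.

```python
import random

def layer_activity(weights, inputs):
    """ReLU activations of a dense layer; `weights` holds one row per neuron."""
    return [max(0.0, sum(w * x for w, x in zip(row, inputs))) for row in weights]

def mean_activity(weights, batch):
    """Average activation of each neuron over a batch of input vectors."""
    acts = [layer_activity(weights, x) for x in batch]
    return [sum(col) / len(batch) for col in zip(*acts)]

def synaptic_scaling(weights, activity, target, rate=1.0):
    """Hypothetical homeostatic rule: multiply each neuron's incoming weights
    by one common factor that moves its mean activity toward `target`.

    Because a whole row is scaled uniformly, the relative strengths of a
    neuron's synapses (the learned pattern) are kept; only the overall gain changes.
    """
    scaled = []
    for row, a in zip(weights, activity):
        if a <= 0.0:
            scaled.append(list(row))  # silent neuron: left unchanged in this sketch
            continue
        factor = (target / a) ** rate  # rate < 1 applies only a partial correction
        scaled.append([w * factor for w in row])
    return scaled

random.seed(0)
batch = [[random.random() for _ in range(4)] for _ in range(32)]
weights = [[random.uniform(0.0, 2.0) for _ in range(4)] for _ in range(3)]

before = mean_activity(weights, batch)
weights_scaled = synaptic_scaling(weights, before, target=1.0)
after = mean_activity(weights_scaled, batch)
print("mean activity before:", [round(a, 3) for a in before])
print("mean activity after: ", [round(a, 3) for a in after])
```

Scaling a whole row by one factor changes how strongly a neuron responds without erasing which inputs it has learned to favor. With `rate=1.0` and non-negative inputs, the ReLU layer lands exactly on the target; a `rate` below 1 moves activity toward the target gradually over repeated calls.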

Applications for such highly efficient self-learning machines can be found, for example, in medicine, where diseases are detected from biological data such as EEG recordings or tomography scans. Other areas of application include smart-grid control of electrical networks and automated laser manufacturing.

The methods developed in the TU Ilmenau study to transfer the brain's self-regulating mechanisms to artificial neural networks were published in the renowned journal "IEEE Transactions on Neural Networks and Learning Systems" (https://ieeexplore.ieee.org/document/9337198) and have attracted international attention in the scientific community. Martin Hofmann, a PhD student of Prof. Mäder and co-author of the publication, identifies a major problem with nature-inspired methods in artificial intelligence applications, namely overfitting: "We speak of overfitting when a model has memorized certain patterns in the training data but is not flexible enough to use them to make correct predictions on unseen test data. We are therefore looking for ways to counteract overfitting by getting closer to the brain's mechanisms of self-regulation."
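To make Hofmann's definition concrete, here is a deliberately extreme toy example in Python that is not taken from the study: a "memorizer" that stores every training pair and answers with the nearest stored point reproduces the training data perfectly, yet predicts unseen points worse than a simple least-squares line. The data, the fixed ±0.5 noise pattern and both models are hypothetical.

```python
def noisy_target(x):
    """Training labels: the true rule y = 2x plus a fixed +/-0.5 'measurement noise'."""
    return 2 * x + (0.5 if x % 2 == 0 else -0.5)

train = [(x, noisy_target(x)) for x in range(10)]
test = [(x + 0.25, 2 * (x + 0.25)) for x in range(9)]  # unseen points, noise-free truth

def memorizer(data):
    """Overfitting extreme: store every training pair, answer with the nearest one."""
    def predict(x):
        return min(data, key=lambda p: abs(p[0] - x))[1]
    return predict

def linear_fit(data):
    """Ordinary least-squares line y = a*x + b: a simpler, less flexible model."""
    n = len(data)
    mx = sum(x for x, _ in data) / n
    my = sum(y for _, y in data) / n
    sxx = sum((x - mx) ** 2 for x, _ in data)
    sxy = sum((x - mx) * (y - my) for x, y in data)
    a = sxy / sxx
    b = my - a * mx
    return lambda x: a * x + b

def mse(model, data):
    """Mean squared error of a model on a list of (x, y) pairs."""
    return sum((model(x) - y) ** 2 for x, y in data) / len(data)

for name, model in [("memorizer", memorizer(train)), ("linear", linear_fit(train))]:
    print(f"{name:9s} train MSE={mse(model, train):.3f}  test MSE={mse(model, test):.3f}")
```

The memorizer has zero training error because it has learned the noise along with the rule, and that noise is exactly what hurts it on the test points. The straight line cannot memorize individual points, so it fits the training data less tightly but generalizes better.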

Numerous biological mechanisms have found their way into the development of learning machines, starting with the first replicas of neural networks. The new findings from TU Ilmenau open up further fascinating possibilities for deep learning, i.e. machine learning with neural networks made up of many layers.

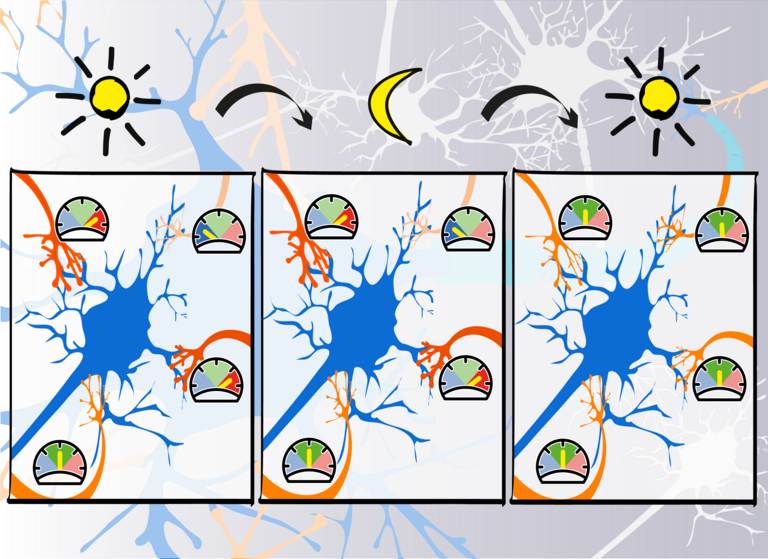

Simplified model of learning in biological neurons. At the end of an awake period (left), synaptic connections to neurons have been strengthened or weakened through short-term learning. During the sleep period (middle), learned stimuli are assessed and adjusted, and the corresponding synaptic connections are scaled to an intermediate level. The final panel (right) shows the adjusted connections at the beginning of the next awake period. This process has now been transferred to artificial neural networks, where it significantly increases their performance.
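The three phases in the caption can be sketched as a toy update loop in Python. This is not the TU Ilmenau algorithm: it assumes a simple Hebbian rule for the awake period (a weight grows with the product of pre- and postsynaptic activity and shrinks when the postsynaptic value is negative) and, for the sleep period, a proportional rescaling that returns the sum of each neuron's incoming weights to its pre-wake level. `wake_phase`, `sleep_phase`, `eta` and the example activities are all hypothetical.

```python
def wake_phase(weights, pre, post, eta=0.1):
    """Short-term Hebbian learning: w_ij changes by eta * post_i * pre_j.

    A positive postsynaptic value strengthens active connections,
    a negative one weakens them.
    """
    return [[w + eta * p_i * x_j for w, x_j in zip(row, pre)]
            for row, p_i in zip(weights, post)]

def sleep_phase(weights, baseline_totals):
    """Proportional rescaling: bring the sum of each neuron's incoming weights
    back to its baseline level while keeping the ratios between them."""
    rescaled = []
    for row, total in zip(weights, baseline_totals):
        s = sum(row)
        # rows with a non-positive sum are left unchanged in this sketch
        rescaled.append([w * total / s for w in row] if s > 0 else list(row))
    return rescaled

weights = [[1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]  # start of the awake period
baseline = [sum(row) for row in weights]      # intermediate level to return to
awake = wake_phase(weights, pre=[1.0, 0.0, 0.5], post=[1.0, -0.5], eta=0.4)
rested = sleep_phase(awake, baseline)         # start of the next awake period

for label, rows in [("end of awake period", awake), ("after sleep", rested)]:
    print(label, [[round(w, 3) for w in row] for row in rows])
```

After the awake phase, the first neuron's total input strength has grown and the second neuron's has shrunk. Sleep brings both back to the same intermediate total, but the first neuron still favors the input that was active during learning, so what was learned is retained in relative form.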

Contact

Prof. Patrick Mäder

Head of the Group for Software Engineering for Safety-Critical Systems