News

Contact

Univ.-Prof. Dr.-Ing. habil. Kai-Uwe Sattler

President

Tel. +49 3677 69-5001

Ernst-Abbe-Zentrum, Ehrenbergstraße 29

Room 3322

News from the group is also available on our Twitter channel: https://twitter.com/avt_imt

Ashutosh Singla; Steve Göring; Dominik Keller; Rakesh Rao Ramachandra Rao; Stephan Fremerey; Alexander Raake

Assessment of the Simulator Sickness Questionnaire for Omnidirectional Videos

Virtual Reality/360° videos provide an immersive experience to users. However, 360° videos may lead to an undesirable effect when consumed with Head-Mounted Displays (HMDs), referred to as simulator sickness or cybersickness. The Simulator Sickness Questionnaire (SSQ) is the most widely used questionnaire for the assessment of simulator sickness. Since the SSQ with its 16 questions was not designed for 360° video related studies, our research hypothesis in this paper was that it may be simplified to enable more efficient testing for 360° video. Hence, we evaluate the SSQ to reduce the number of questions asked of subjects, based on six different previously conducted studies. We derive the reduced set of questions from the SSQ using Principal Component Analysis (PCA) for each test. Pearson correlation is analysed to compare all obtained reduced questionnaires as well as two further variants of the SSQ reported in the literature, namely the Virtual Reality Sickness Questionnaire (VRSQ) and the Cybersickness Questionnaire (CSQ). Our analysis suggests that a reduced questionnaire with 9 out of 16 questions yields the best agreement with the initial SSQ while using about 44% fewer questions. Exploratory Factor Analysis (EFA) shows that the nine symptom-related attributes determined as relevant by PCA also appear to be sufficient to represent the three dimensions resulting from EFA, namely Uneasiness, Visual Discomfort and Loss of Balance. The simplified version of the SSQ has the potential to be used more efficiently than the initial SSQ for 360° video by focusing on the questions that are most relevant for individuals, shortening the required testing time.

IEEE Conference on Virtual Reality and 3D User Interfaces (VR) 2021
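As a rough illustration of the analysis described in the abstract above, the following sketch ranks questionnaire items by their loading on the first principal component and then compares the reduced sum score with the full one using Pearson correlation. It is only a sketch under stated assumptions: the responses are synthetic, the selection rule (absolute loading on the first component only) is a simplification chosen here and not necessarily the criterion used in the paper, and the names `pearson`, `first_component` and `select_items` are hypothetical.

```python
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / (vx * vy) ** 0.5


def covariance_matrix(rows):
    """Sample covariance matrix of the columns of `rows`."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    return [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
            for i in range(d)]


def first_component(cov, iterations=200):
    """Approximate the first principal component by power iteration."""
    d = len(cov)
    v = [1.0 / d ** 0.5] * d
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]
    return v


def select_items(rows, keep):
    """Indices of the `keep` items with the largest absolute loadings."""
    loadings = first_component(covariance_matrix(rows))
    ranked = sorted(range(len(loadings)),
                    key=lambda j: abs(loadings[j]), reverse=True)
    return sorted(ranked[:keep])


# Synthetic responses (assumption): 16 items, each rated 0..3.
# Items 0..8 follow a latent "sickness" level, items 9..15 are mostly noise.
rng = random.Random(0)
N_ITEMS = 16


def synthetic_response():
    sickness = rng.random()
    row = []
    for j in range(N_ITEMS):
        if j < 9:
            value = round(3 * sickness + rng.gauss(0, 0.4))
            row.append(min(3, max(0, value)))
        else:
            row.append(rng.choice([0, 0, 0, 1]))
    return row


responses = [synthetic_response() for _ in range(200)]
kept = select_items(responses, keep=9)

# Compare the unweighted sum over all items with the sum over kept items.
full_score = [sum(r) for r in responses]
reduced_score = [sum(r[j] for j in kept) for r in responses]
r = pearson(full_score, reduced_score)
print("kept items:", kept)
print("correlation with full score:", round(r, 3))
```

The actual SSQ uses weighted subscales instead of a plain sum, so the correlation printed here only demonstrates the comparison step and says nothing about the published results.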

TU Ilmenau | Eckhardt Schön

At the inaugural meeting of the newly elected Institute Council on June 23, 2021, Prof. Alexander Raake (Audiovisual Technology Group) was elected as the new Director of the Institute of Media Technology (IMT).

Jun.-Prof. Dr. Matthias Hirth (User-Centered Analysis of Multimedia Data Group) was elected as his deputy.

The CO-HUMANICS project (Co-presence of people and interactive companions for seniors) started on June 1, 2021. Five TU Ilmenau groups are involved in this project, which is funded by the Carl Zeiss Foundation with 4.5 million euros. A total of eleven new employees will work on the project.

As a member of the project team, Luljeta Sinani, M.Sc., has started her work in the group.

In spring 2021, the group began implementing the ILMETA large-scale facility, starting with the placement of the first orders.

At the beginning of 2020, the German Research Foundation (DFG) gave a positive review of the group's application for co-financing of the ILMETA large-scale facility. The DFG and the Free State of Thuringia are providing a total of €570,000 for the realization of this infrastructure project.

Steve Göring, Rakesh Rao Ramachandra Rao, Bernhard Feiten, and Alexander Raake

"Modular Framework and Instances of Pixel-based Video Quality Models for UHD-1/4K."

IEEE Access, vol. 9, 2021.

(https://ieeexplore.ieee.org/document/9355144)

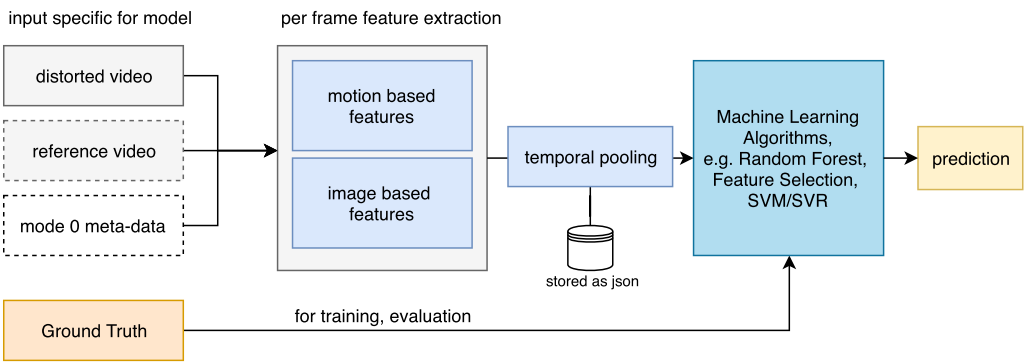

The popularity of video on-demand streaming services has increased tremendously in recent years. Most services use HTTP-based adaptive video streaming methods. Today's movies and TV shows are typically recorded in UHD-1/4K and streamed using settings attuned to the end device and current network conditions. Video quality prediction models can be used to perform an extensive analysis of video codec settings to ensure high quality. Hence, we present a framework for the development of pixel-based video quality models. We instantiate four different model variants (hyfr, hyfu, fume and nofu) for short-term video quality estimation targeting various use cases. Our models range from a no-reference video quality model to a full-reference model, including hybrid model extensions that incorporate client-accessible metadata.

All models share a similar architecture and the same core features, depending on their mode of operation. Besides traditional mean opinion score prediction, we tackle quality estimation as a classification and multi-output regression problem. Our performance evaluation is based on the publicly available AVT-VQDB-UHD-1 dataset. We further evaluate the introduced center-cropping approach to speed up calculations. Our analysis shows that our hybrid full-reference model (hyfr) performs best, e.g. 0.92 PCC for MOS prediction, followed by the hybrid no-reference model (hyfu), full-reference model (fume) and no-reference model (nofu). We further show that our models outperform popular state-of-the-art models. The introduced features and machine-learning pipeline are publicly available for use by the community for further research and extension.
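The center-cropping idea mentioned in the abstract above can be pictured with a minimal sketch: only the central region of each frame is passed on to feature calculation, which reduces the number of pixels to process. The function name `center_crop`, the toy frame and the crop size are assumptions made for illustration; the abstract does not state the crop dimensions used by the models.

```python
def center_crop(frame, crop_height, crop_width):
    """Return the central crop_height x crop_width region of a 2D frame."""
    height, width = len(frame), len(frame[0])
    if crop_height > height or crop_width > width:
        raise ValueError("crop size exceeds frame size")
    top = (height - crop_height) // 2
    left = (width - crop_width) // 2
    return [row[left:left + crop_width]
            for row in frame[top:top + crop_height]]


# Toy 6x8 "luma plane" standing in for a video frame (assumption).
frame = [[y * 8 + x for x in range(8)] for y in range(6)]
crop = center_crop(frame, crop_height=2, crop_width=4)

# Share of the original pixels that still has to be processed.
fraction = (len(crop) * len(crop[0])) / (len(frame) * len(frame[0]))
print(crop)
print(fraction)
```

In this toy case only one sixth of the pixels remain, which shows why cropping speeds up pixel-based feature extraction; how much prediction accuracy is retained is what the paper's evaluation addresses.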

Recently, the Deutsche Forschungsgemeinschaft (DFG) accepted a submitted project proposal within the DFG priority programme "Auditory Cognition in Interactive Virtual Environments" (AUDICTIVE). The project is being carried out in cooperation with the Chair for Hearing Technology and Acoustics (Prof. Janina Fels, RWTH Aachen) and the Department of Cognitive and Developmental Psychology (Prof. Maria Klatte, TU Kaiserslautern).

News from 2017 to the first half of 2021 (PDF, 2.5 MB)